Publications

Publications by category in reverse chronological order. Generated by jekyll-scholar.

2025


Revisiting Concept Drift in Windows Malware Detection: Adaptation to Real Drifted Malware with Minimal Samples. Adrian Shuai Li, Arun Iyengar, Ashish Kundu, and Elisa Bertino. In Network and Distributed System Security (NDSS) Symposium, 2025.

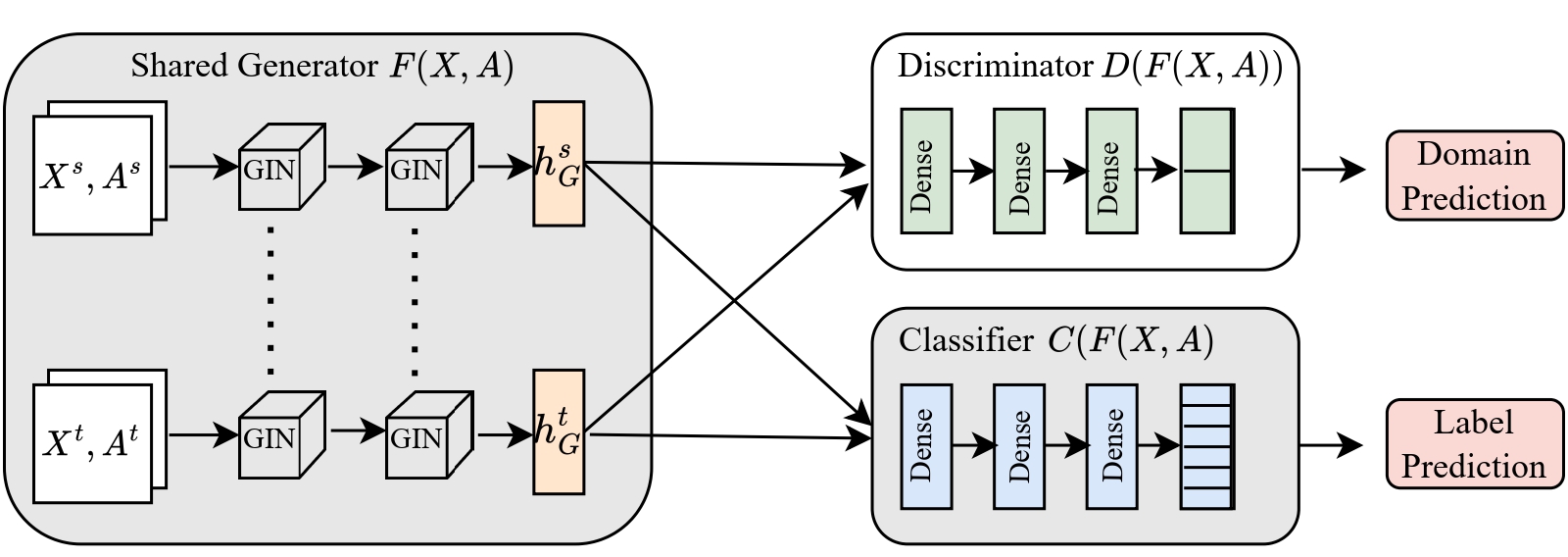

In applying deep learning for malware classification, it is crucial to account for the prevalence of malware evolution, which can cause trained classifiers to fail on drifted malware. Existing solutions to address concept drift use active learning: they select new samples for analysts to label and then retrain the classifier with the new labels. Our key finding is that current retraining techniques do not achieve optimal results. These techniques overlook that updating the model with scarce drifted samples requires learning features that remain consistent across pre-drift and post-drift data. The model should thus be able to disregard specific features that, while beneficial for classifying pre-drift data, are absent in post-drift data, thereby preventing prediction degradation. In this paper, we propose a new technique for detecting and classifying drifted malware that learns drift-invariant features in malware control flow graphs by leveraging graph neural networks with adversarial domain adaptation. We compare it with existing model retraining methods in active learning-based malware detection systems and with other domain adaptation techniques from the vision domain. Our approach significantly improves drifted malware detection on publicly available benchmarks and on real-world malware databases reported daily by security companies in 2024. We also tested our approach on predicting multiple malware families that drifted over time. A thorough evaluation shows that our approach outperforms state-of-the-art approaches.

@inproceedings{li2025revisiting, title = {Revisiting Concept Drift in Windows Malware Detection: Adaptation to Real Drifted Malware with Minimal Samples}, author = {Li, Adrian Shuai and Iyengar, Arun and Kundu, Ashish and Bertino, Elisa}, booktitle = {Network and Distributed System Security (NDSS) Symposium}, year = {2025}, }

Maximizing Information in Domain-Invariant Representation Improves Transfer Learning. Adrian Shuai Li, Elisa Bertino, Xuan-Hong Dang, Ankush Singla, Yuhai Tu, and Mark N. Wegman. In 2025 IEEE 11th International Conference on Collaboration and Internet Computing (CIC). To appear, 2025.

Best Paper Award

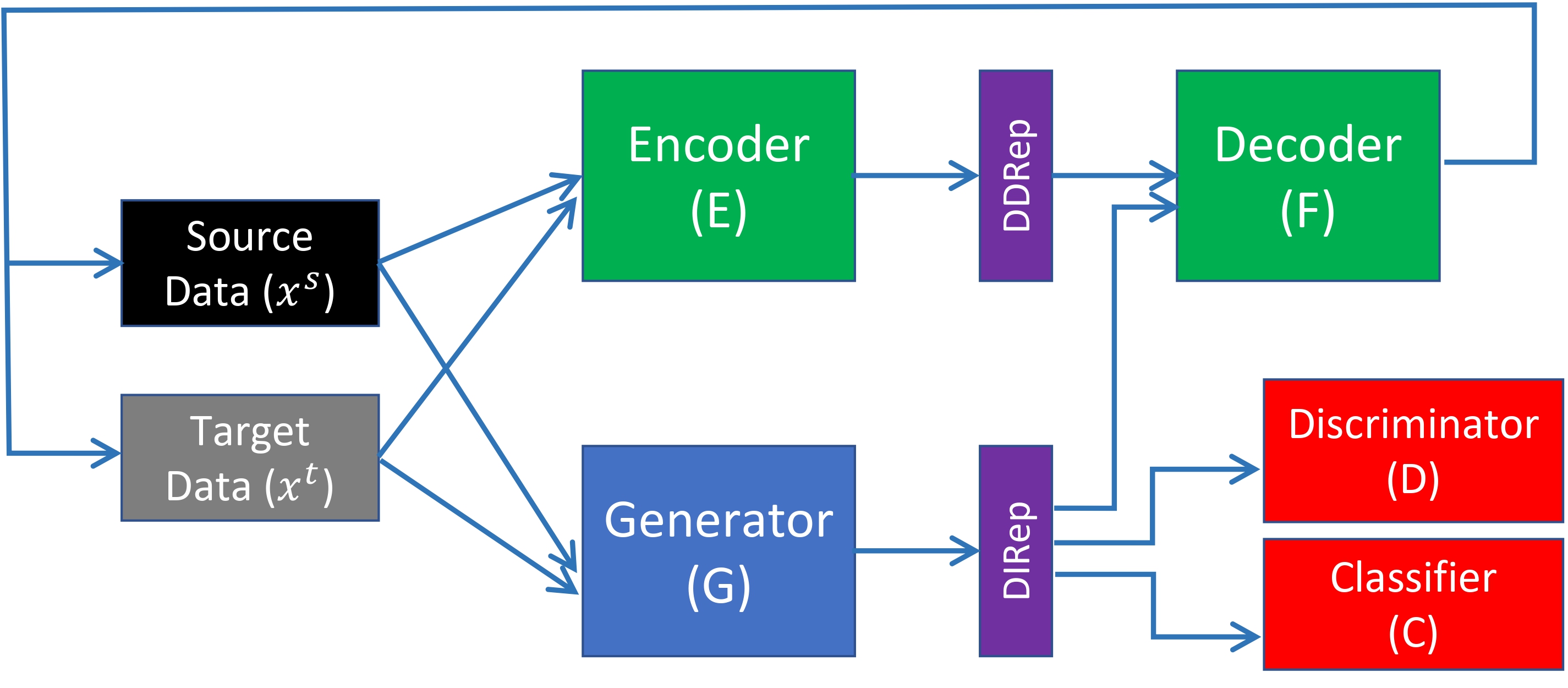

We propose MaxDIRep, a domain adaptation method that improves the decomposition of data representations into domain-independent and domain-dependent components. Existing methods, such as Domain-Separation Networks (DSN), use a weak orthogonality constraint between these components, which can lead to label-relevant features being partially encoded in the domain-dependent representation (DDRep) rather than the domain-independent representation (DIRep). As a result, information crucial for target-domain classification may be missing from the DIRep. MaxDIRep addresses this issue by applying a Kullback-Leibler (KL) divergence constraint to minimize the information content of the DDRep, thereby encouraging the DIRep to retain features that are both domain-invariant and predictive of target labels. Through geometric analysis and an ablation study on synthetic datasets, we show why DSN’s weaker constraint can lead to suboptimal adaptation. Experiments on standard image benchmarks and a network intrusion detection task demonstrate that MaxDIRep achieves strong performance, works with pretrained models, and generalizes to non-image classification tasks.
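To make the idea of "minimizing the information content of the DDRep" concrete, here is a minimal sketch under an assumption the abstract does not state: that the DDRep encoder outputs a diagonal Gaussian (mean and log-variance per dimension, as in a VAE) and that the KL constraint is taken against a standard normal prior. The helper names and the `beta` weight are hypothetical; MaxDIRep's actual formulation and weighting may differ.

```python
import math
from typing import Sequence


def kl_to_standard_normal(mu: Sequence[float], log_var: Sequence[float]) -> float:
    """KL( N(mu, diag(exp(log_var))) || N(0, I) ), summed over latent dimensions.

    Per dimension the closed form is 0.5 * (mu**2 + sigma**2 - log(sigma**2) - 1),
    which is zero only when mu = 0 and sigma = 1, i.e. when the encoded
    distribution matches the prior and so carries no input-specific information.
    """
    if len(mu) != len(log_var):
        raise ValueError("mu and log_var must have the same length")
    return 0.5 * sum(m * m + math.exp(lv) - lv - 1.0 for m, lv in zip(mu, log_var))


def objective(task_loss: float, ddrep_mu: Sequence[float],
              ddrep_log_var: Sequence[float], beta: float = 1.0) -> float:
    """Hypothetical combined loss: task loss plus a beta-weighted KL penalty on the DDRep."""
    return task_loss + beta * kl_to_standard_normal(ddrep_mu, ddrep_log_var)
```

For example, `objective(0.25, [1.0], [0.0], beta=2.0)` adds a KL term of 0.5 (weighted to 1.0) to the task loss. Penalizing the DDRep this way, rather than only decorrelating it from the DIRep, pushes label-relevant features out of the DDRep and into the DIRep.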

@inproceedings{li2025maximal, author = {Li, Adrian Shuai and Bertino, Elisa and Dang, Xuan-Hong and Singla, Ankush and Tu, Yuhai and Wegman, Mark N.}, title = {Maximizing Information in Domain-Invariant Representation Improves Transfer Learning}, booktitle = {2025 IEEE 11th International Conference on Collaboration and Internet Computing (CIC)}, publisher = {IEEE}, year = {2025} }

LLMalMorph: On The Feasibility of Generating Variant Malware using Large-Language-Models. Md Ajwad Akil, Adrian Shuai Li, Imtiaz Karim, Arun Iyengar, Ashish Kundu, Vinny Parla, and Elisa Bertino. In 2025 IEEE 7th International Conference on Trust, Privacy and Security in Intelligent Systems, and Applications (TPS). To appear, 2025.

Best Paper Award

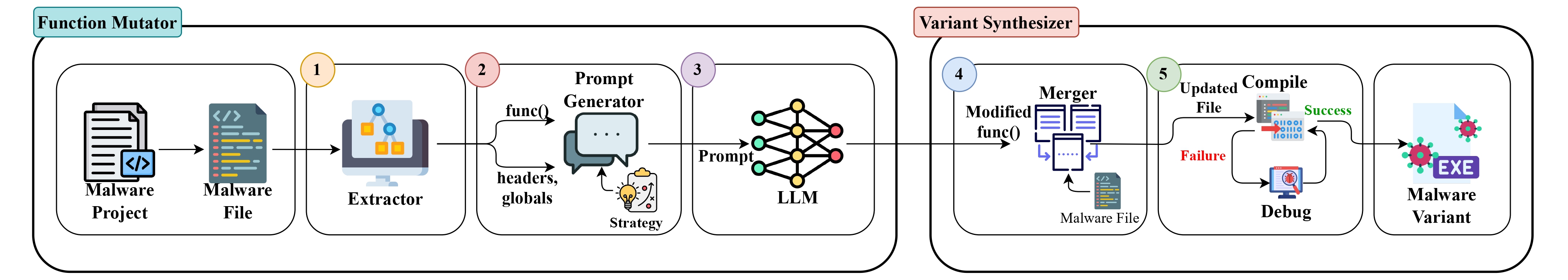

Large Language Models (LLMs) have transformed software development and automated code generation. Motivated by these advancements, this paper explores the feasibility of using LLMs to modify malware source code to generate variants. We introduce LLMalMorph, a semi-automated framework that leverages the semantic and syntactic code comprehension of LLMs to generate new malware variants. LLMalMorph extracts function-level information from the malware source code and employs custom-engineered prompts coupled with strategically defined code transformations to guide the LLM in generating variants without resource-intensive fine-tuning. To evaluate LLMalMorph, we collected 10 diverse Windows malware samples of varying types, complexity, and functionality and generated 618 variants. Our thorough experiments demonstrate that it is possible to reduce the detection rates of antivirus engines on these malware variants to some extent while preserving malware functionality. In addition, despite not being optimized against any Machine Learning (ML)-based malware detector, several variants also achieved notable attack success rates against an ML-based malware classifier. We also discuss the limitations of current LLM capabilities in generating malware variants from source code and assess where this emerging technology stands in the broader context of malware variant generation.

@inproceedings{akil2025llmalmorph, title = {LLMalMorph: On The Feasibility of Generating Variant Malware using Large-Language-Models}, author = {Akil, Md Ajwad and Li, Adrian Shuai and Karim, Imtiaz and Iyengar, Arun and Kundu, Ashish and Parla, Vinny and Bertino, Elisa}, booktitle = {2025 IEEE 7th International Conference on Trust, Privacy and Security in Intelligent Systems, and Applications (TPS)}, publisher = {IEEE}, year = {2025} }

LFreeDA: Label-Free Drift Adaptation for Windows Malware Detection. Adrian Shuai Li and Elisa Bertino. arXiv preprint arXiv:2511.14963, 2025.

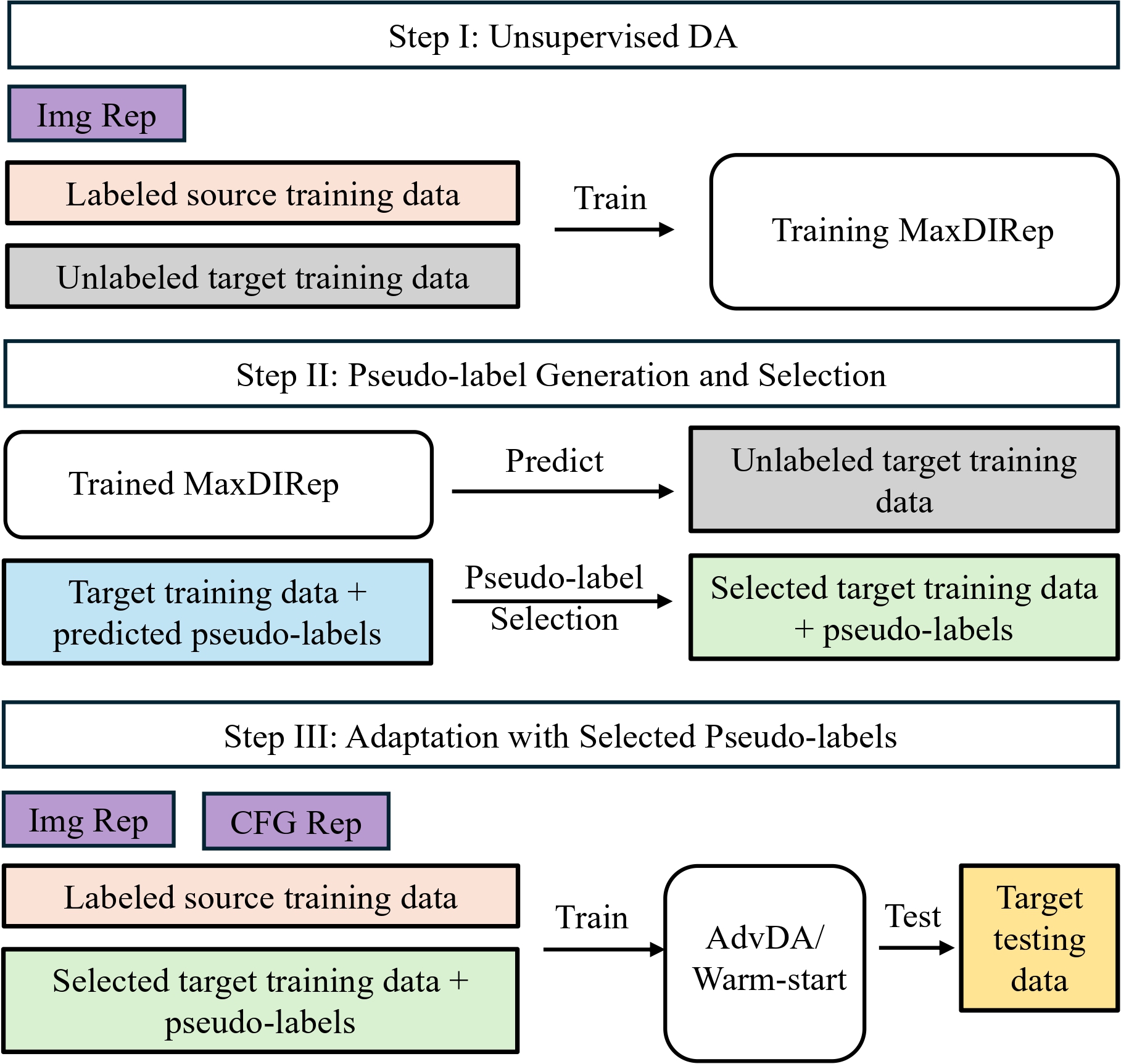

Machine learning (ML)-based malware detectors degrade over time as concept drift introduces new and evolving families unseen during training. Retraining is limited by the cost and time of manual labeling or sandbox analysis. Existing approaches mitigate this via drift detection and selective labeling, but fully label-free adaptation remains largely unexplored. Recent self-training methods use a previously trained model to generate pseudo-labels for unlabeled data and then train a new model on these labels. The unlabeled data are used only for inference and do not participate in training the earlier model. We argue that these unlabeled samples still carry valuable information that can be leveraged when incorporated appropriately into training. This paper introduces LFreeDA, an end-to-end framework that adapts malware classifiers to drift without manual labeling or drift detection. LFreeDA first performs unsupervised domain adaptation on malware images, jointly training on labeled and unlabeled samples to infer pseudo-labels and prune noisy ones. It then adapts a classifier on CFG representations using the labeled and selected pseudo-labeled data, leveraging the scalability of images for pseudo-labeling and the richer semantics of CFGs for final adaptation. Evaluations on the real-world MB-24+ dataset show that LFreeDA improves accuracy by up to 12.6% and F1 by 11.1% over no-adaptation lower bounds, and is only 4% and 3.4% below fully supervised upper bounds in accuracy and F1, respectively. It also matches the performance of state-of-the-art methods provided with ground truth labels for 300 target samples. Additional results on two controlled-drift benchmarks further confirm that LFreeDA maintains malware detection performance as malware evolves without human labeling.
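The abstract does not specify how LFreeDA prunes noisy pseudo-labels. As an illustration only, the sketch below uses a common heuristic that we assume here: keep a pseudo-label only if the model's top-class probability clears a confidence threshold, optionally capping how many samples each class may contribute. The function name, threshold, and cap are hypothetical.

```python
from typing import Dict, List, Optional, Sequence, Tuple


def select_pseudo_labels(
    probs: Sequence[Sequence[float]],
    threshold: float = 0.9,
    max_per_class: Optional[int] = None,
) -> List[Tuple[int, int]]:
    """Return sorted (sample_index, pseudo_label) pairs that survive pruning.

    Hypothetical helper: confidence thresholding plus an optional per-class cap
    is an assumed heuristic, not LFreeDA's published pruning rule.
    """
    candidates = []
    for i, p in enumerate(probs):
        label = max(range(len(p)), key=lambda k: p[k])  # arg-max class
        if p[label] >= threshold:
            candidates.append((p[label], i, label))

    # Visit the most confident predictions first so that a per-class cap
    # discards the least confident pseudo-labels of each class.
    candidates.sort(key=lambda c: (-c[0], c[1]))

    kept: List[Tuple[int, int]] = []
    per_class: Dict[int, int] = {}
    for _, i, label in candidates:
        if max_per_class is not None and per_class.get(label, 0) >= max_per_class:
            continue
        per_class[label] = per_class.get(label, 0) + 1
        kept.append((i, label))
    return sorted(kept)
```

With `probs = [[0.95, 0.05], [0.6, 0.4], [0.02, 0.98], [0.91, 0.09]]`, the default call keeps samples 0, 2, and 3; adding `max_per_class=1` drops sample 3, the less confident of the two class-0 predictions. A per-class cap is one way to keep a dominant family from flooding the pseudo-labeled set.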

@article{li2025lfreeda, author = {Li, Adrian Shuai and Bertino, Elisa}, title = {LFreeDA: Label-Free Drift Adaptation for Windows Malware Detection}, journal = {arXiv preprint arXiv:2511.14963}, year = {2025}, }

2024

Autoencoder with generative adversarial networks for transfer learning between domains. Mark Wegman, Yuhai Tu, Xuan-Hong Dang, Ankush Singla, and 1 more author. US Patent App. 18/129,540, 2024.


Transfer Learning for Security: Challenges and Future Directions. Adrian Shuai Li, Arun Iyengar, Ashish Kundu, and Elisa Bertino. arXiv preprint arXiv:2403.00935, 2024.

Many machine learning and data mining algorithms rely on the assumption that the training and testing data share the same feature space and distribution. However, this assumption may not always hold. For instance, there are situations where we need to classify data in one domain, but sufficient training data is available only from a different domain. The latter data may follow a distinct distribution. In such cases, successfully transferring knowledge across domains can significantly improve learning performance and reduce the need for extensive data labeling efforts. Transfer learning (TL) has thus emerged as a promising framework to tackle this challenge, particularly in security-related tasks. This paper reviews current advancements in utilizing TL techniques for security. It also discusses existing research gaps in applying TL in the security domain and explores potential future research directions and issues that arise in the context of TL-assisted security solutions.

@article{li2024transfer, title = {Transfer Learning for Security: Challenges and Future Directions}, author = {Li, Adrian Shuai and Iyengar, Arun and Kundu, Ashish and Bertino, Elisa}, journal = {arXiv preprint arXiv:2403.00935}, year = {2024}, }

Overcoming the lack of labeled data: Training malware detection models using adversarial domain adaptation. Sonam Bhardwaj, Adrian Shuai Li, Mayank Dave, and Elisa Bertino. Computers & Security, 2024.

Many current malware detection methods are based on supervised learning techniques, which, however, have certain limitations. First, these techniques require a large amount of labeled data for training, which is often difficult to obtain. Second, they are not very effective when there are differences in domain distribution between new malware and known malware. To address these issues, we propose MD-ADA, a malware detection framework that leverages adversarial domain adaptation (DA). DA allows one to adapt a training malware dataset available in one domain, referred to as the source, for training a classifier in another domain, referred to as the target. DA, typically used when the target has limited training malware data available, maps the source and target datasets into a common latent space. As we use an image representation for malware binaries, MD-ADA uses a convolutional neural network (CNN) providing a lossless image embedding for the source and target datasets. MD-ADA also employs a generative adversarial network (GAN) for malware classification that is suitable for scenarios with few target-labeled data where the distribution of the features is similar (homogeneous) or different (heterogeneous). We have carried out several experiments to assess the performance of MD-ADA. The experiments show that MD-ADA outperforms the fine-tuning approach, with an accuracy of 99.29% on the BODMAS dataset and 89.3% on the Malevis dataset for homogeneous feature distributions, and 90.12% on the CICMalMem2022 dataset (Target) and 83.23% on the Microsoft Kaggle dataset (Target) for heterogeneous feature distributions.
The observed F1-scores of 99.13% and 87.5% for homogeneous feature distributions and 91.27% and 81.7% for heterogeneous distributions indicate that the MD-ADA performance is satisfactory for both data distributions when the target has very few labeled data.
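MD-ADA works on an image representation of malware binaries. The abstract does not give the exact conversion, so the sketch below assumes one widely used scheme: read the file as unsigned bytes and lay them out row by row in a fixed-width grayscale matrix, one byte per pixel (intensity 0 to 255), zero-padding the last row. The width of 256 and both helper names are hypothetical. The mapping is lossless as long as the original byte length is stored alongside the image.

```python
from typing import List


def bytes_to_grayscale(data: bytes, width: int = 256) -> List[List[int]]:
    """Lay out raw bytes as rows of `width` pixels; each byte is one intensity in 0..255."""
    if width <= 0:
        raise ValueError("width must be positive")
    rows: List[List[int]] = []
    for start in range(0, len(data), width):
        row = list(data[start:start + width])  # iterating bytes yields ints
        row.extend([0] * (width - len(row)))   # zero-pad the final partial row
        rows.append(row)
    return rows


def grayscale_to_bytes(rows: List[List[int]], length: int) -> bytes:
    """Invert the layout, dropping padding by truncating to the original length."""
    flat = [pixel for row in rows for pixel in row]
    return bytes(flat[:length])
```

For example, `bytes_to_grayscale(b"\x00\x01\x02\xff\x10", width=2)` gives `[[0, 1], [2, 255], [16, 0]]`. In practice the resulting matrix would typically be resized to the CNN's fixed input shape, which is where information can be lost.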

@article{bhardwaj2024overcoming, title = {Overcoming the lack of labeled data: Training malware detection models using adversarial domain adaptation}, author = {Bhardwaj, Sonam and Li, Adrian Shuai and Dave, Mayank and Bertino, Elisa}, journal = {Computers \& Security}, volume = {140}, pages = {103769}, year = {2024}, publisher = {Elsevier}, }

Adversarial Domain Adaptation for Metal Cutting Sound Detection: Leveraging Abundant Lab Data for Scarce Industry Data. Mir Imtiaz Mostafiz, Eunseob Kim, Adrian Shuai Li, Elisa Bertino, Martin Byung-Guk Jun, and Ali Shakouri. In 2024 IEEE 22nd International Conference on Industrial Informatics (INDIN), 2024.

Cutting state monitoring in the milling process is crucial for improving manufacturing efficiency and tool life. Cutting sound detection using machine learning (ML) models, inspired by experienced machinists, can be employed as a cost-effective and non-intrusive monitoring method in a complex manufacturing environment. However, labeling industry data for training is costly and time-consuming. Moreover, industry data is often scarce. In this study, we propose a novel adversarial domain adaptation (DA) approach that leverages abundant lab data to learn from scarce industry data, both labeled, for training a cutting-sound detection model. Rather than adapting the features from separate domains directly, we first project them into two separate latent spaces that jointly work as the feature space for learning domain-independent representations. We also analyze two different mechanisms for adversarial learning, in which the discriminator works as an adversary and as a critic in separate settings, enabling our model to learn expressive domain-invariant and domain-ingrained features, respectively. We collected cutting sound data from multiple sensors in different locations, prepared datasets from the lab and industry domains, and evaluated our learning models on them. Experiments showed that our models outperformed multi-layer-perceptron-based vanilla domain adaptation models in labeling tasks on the curated datasets, achieving nearly 92%, 82%, and 85% accuracy, respectively, for three different sensors installed in industry settings.

@inproceedings{imtiaz2024adversarial, title = {Adversarial Domain Adaptation for Metal Cutting Sound Detection: Leveraging Abundant Lab Data for Scarce Industry Data}, author = {Imtiaz Mostafiz, Mir and Kim, Eunseob and Li, Adrian Shuai and Bertino, Elisa and Jun, Martin Byung-Guk and Shakouri, Ali}, booktitle = {2024 IEEE 22nd International Conference on Industrial Informatics (INDIN)}, pages = {1--8}, year = {2024}, organization = {IEEE}, }

2023


Building Manufacturing Deep Learning Models with Minimal and Imbalanced Training Data Using Domain Adaptation and Data Augmentation. Adrian Shuai Li, Elisa Bertino, Rih-Teng Wu, and Ting-Yan Wu. In 2023 IEEE International Conference on Industrial Technology (ICIT), 2023.

Deep learning (DL) techniques are highly effective for defect detection from images. Training DL classification models, however, requires vast amounts of labeled data, which is often expensive to collect. In many cases, the available training data is not only limited but may also be imbalanced. In this paper, we propose a novel domain adaptation (DA) approach to address the problem of labeled training data scarcity for a target learning task by transferring knowledge gained from an existing source dataset used for a similar learning task. Our approach works for scenarios where the source dataset and the dataset available for the target learning task have the same or different feature spaces. We combine our DA approach with an autoencoder-based data augmentation approach to address the problem of imbalanced target datasets. We evaluate our combined approach using image data for wafer defect prediction. The experiments show its superior performance against other algorithms when the number of labeled samples in the target dataset is very small and the target dataset is imbalanced.

@inproceedings{li2023building, title = {Building Manufacturing Deep Learning Models with Minimal and Imbalanced Training Data Using Domain Adaptation and Data Augmentation}, author = {Li, Adrian Shuai and Bertino, Elisa and Wu, Rih-Teng and Wu, Ting-Yan}, booktitle = {2023 IEEE International Conference on Industrial Technology (ICIT)}, pages = {1--8}, year = {2023}, organization = {IEEE}, }

Machine Learning Techniques for Cybersecurity. Elisa Bertino, Sonam Bhardwaj, Fabrizio Cicala, Sishuai Gong, Imtiaz Karim, Charalampos Katsis, Hyunwoo Lee, Adrian Shuai Li, and Ashraf Y. Mahgoub. Springer Nature Synthesis Lectures on Information Security, Privacy, and Trust, 2023.

This book explores machine learning (ML) defenses against the many cyberattacks that make our workplaces, schools, private residences, and critical infrastructures vulnerable as a consequence of the dramatic increase in botnets, data ransom, system and network denials of service, sabotage, and data theft attacks. The use of ML techniques for security tasks has been steadily increasing in research and also in practice over the last 10 years. Covering efforts to devise more effective defenses, the book explores security solutions that leverage ML techniques that have recently grown in feasibility thanks to significant advances in ML combined with big data collection and analysis capabilities. Since the use of ML entails understanding which techniques can be best used for specific tasks to ensure comprehensive security, the book provides an overview of the current state of the art of ML techniques for security and a detailed taxonomy of security tasks and corresponding ML techniques that can be used for each task. It also covers challenges for the use of ML for security tasks and outlines research directions. While many recent papers have proposed approaches for specific tasks, such as software security analysis and anomaly detection, these approaches differ in many aspects, such as the types of features in the model and the dataset used for training the models. In a way that no other available work does, this book provides readers with a comprehensive view of the complex area of ML for security, explains its challenges, and highlights areas for future research.
This book is relevant to graduate students in computer science and engineering as well as information systems studies, and will also be useful to researchers and practitioners who work in the area of ML techniques for security tasks.

@book{bertino2023machine, title = {Machine Learning Techniques for Cybersecurity}, author = {Bertino, Elisa and Bhardwaj, Sonam and Cicala, Fabrizio and Gong, Sishuai and Karim, Imtiaz and Katsis, Charalampos and Lee, Hyunwoo and Li, Adrian Shuai and Mahgoub, Ashraf Y}, year = {2023}, publisher = {Springer}, }

2022


A capability-based distributed authorization system to enforce context-aware permission sequences. Adrian Shuai Li, Reihaneh Safavi-Naini, and Philip WL Fong. In Proceedings of the 27th ACM on Symposium on Access Control Models and Technologies, 2022.

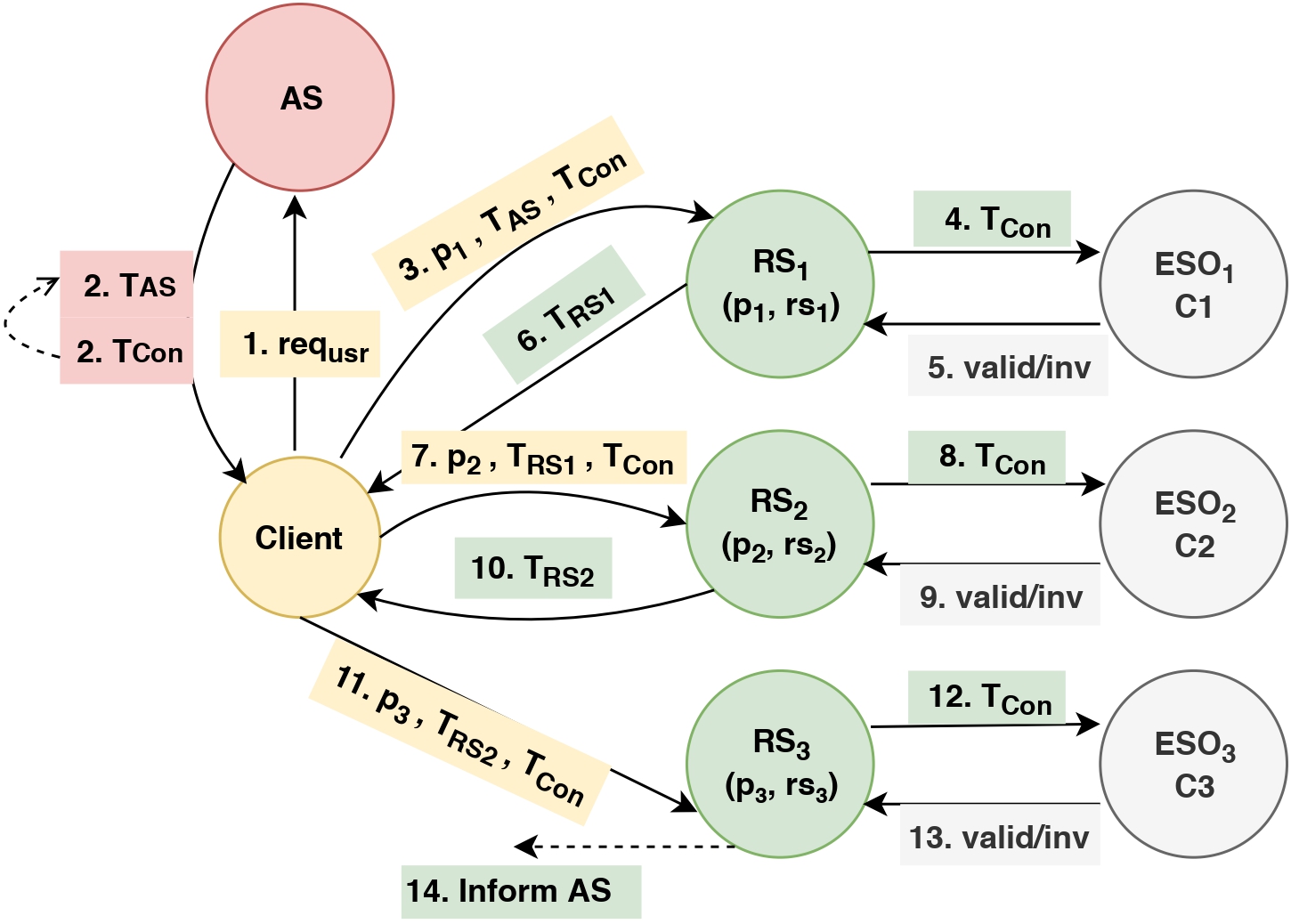

Controlled sharing is fundamental to distributed systems. We consider a capability-based distributed authorization system in which a client receives capabilities (access tokens) from an authorization server to access the resources of resource servers. Capability-based authorization systems have been widely used on the Web, in mobile applications, and in other distributed systems. A common requirement of such systems is that the user must use tokens of multiple servers in a particular order. A related requirement is that a token may be used only if certain environmental conditions hold. We introduce a secure capability-based system that supports "permission sequence" and "context". This allows a finite sequence of permissions to be enforced, each with its own specific context. We prove the safety property of this system for these conditions and integrate the system into OAuth 2.0 with proof-of-possession tokens. We evaluate our implementation and compare it with plain OAuth with respect to the average time for obtaining an authorization token and acquiring access to the resource.
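The notion of a permission sequence with per-step context can be pictured as a small state machine: the capability lists ordered steps, each naming a server, a permission, and a context predicate, and a request is granted only if it matches the next pending step and that step's predicate holds. The sketch below is a toy model under our own assumptions; `Step`, `SequenceCapability`, and the example server names are hypothetical, and it omits the paper's distributed protocol, safety proof, and OAuth 2.0 proof-of-possession binding.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

Context = Dict[str, Any]  # environmental attributes, e.g. time of day or location


@dataclass(frozen=True)
class Step:
    server: str                           # resource server for this step
    permission: str                       # permission granted at this step
    condition: Callable[[Context], bool]  # context predicate that must hold


@dataclass
class SequenceCapability:
    """Hypothetical model of a capability that encodes an ordered permission sequence."""

    steps: List[Step]
    next_index: int = 0

    def request(self, server: str, permission: str, context: Context) -> bool:
        if self.next_index >= len(self.steps):
            return False  # every permission in the sequence has been used
        step = self.steps[self.next_index]
        if (step.server, step.permission) != (server, permission):
            return False  # out-of-order or unlisted access is denied
        if not step.condition(context):
            return False  # context does not hold; the step stays pending
        self.next_index += 1  # advance to the next permission in the sequence
        return True
```

For example, with steps `("files.example", "read")` allowed only before 18:00 followed by `("print.example", "print")`, a print request is denied until the read has succeeded, and a read at hour 20 is denied. In a real deployment the progress index would need to be tracked by a trusted party or carried in successively issued tokens; keeping it in a client-side object, as here, is only for illustration.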

@inproceedings{li2022capability, title = {A capability-based distributed authorization system to enforce context-aware permission sequences}, author = {Li, Adrian Shuai and Safavi-Naini, Reihaneh and Fong, Philip WL}, booktitle = {Proceedings of the 27th ACM on Symposium on Access Control Models and Technologies}, pages = {195--206}, year = {2022}, }

2020


Secure logging with security against adaptive crash attack. Sepideh Avizheh, Reihaneh Safavi-Naini, and Shuai Li. In Foundations and Practice of Security: 12th International Symposium, FPS 2019, Toulouse, France, November 5–7, 2019, Revised Selected Papers 12, 2020.

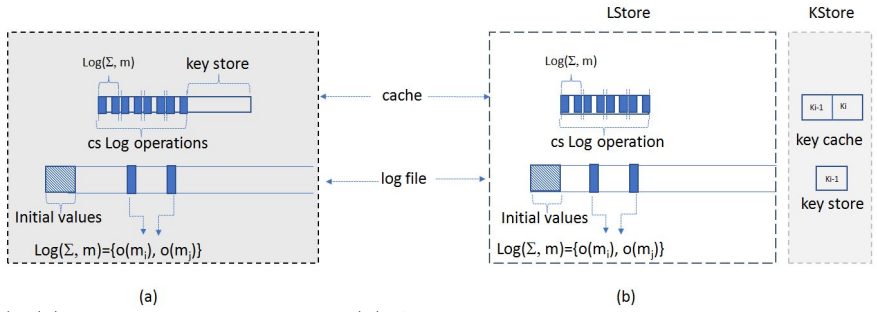

Logging systems are an essential component of security systems, and their security has been widely studied. Recently (2017), it was shown that existing secure logging protocols are vulnerable to a crash attack, in which the adversary modifies the log file and then crashes the system to make the modification indistinguishable from a normal system crash. The attacker was assumed to be non-adaptive: it could not see the file content before modifying it, and it crashed the system immediately after the modification. The authors also proposed a system called SLiC that protects against this attacker. In this paper, we consider an (insider) adaptive adversary who can see the file content as new log operations are performed. This is a powerful adversary who can attempt to rewind the system to a past state. We formalize security against this adversary and introduce a scheme with provable security. We show that security against this attacker requires some (small) protected memory that can become accessible to the attacker after the system is compromised. We show that existing secure logging schemes are insecure in this setting, even if the system provides such protected memory. We propose a novel mechanism that, in its basic form, uses a pair of keys that evolve at different rates, and we employ this mechanism in an existing logging scheme that has forward integrity to obtain a system with provable security against adaptive (and hence non-adaptive) crash attacks. We implemented our scheme on a desktop computer and a Raspberry Pi and showed that, in addition to higher security, it achieves a significant efficiency gain over SLiC.
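Forward integrity, which the scheme builds on, is commonly obtained by evolving the MAC key with a one-way function after each log entry and discarding the old key, so that an attacker who captures the current key cannot forge earlier entries. The sketch below shows that building block with Python's standard `hmac` and `hashlib` modules, plus a second key that, as a loose illustration of "keys that evolve at different rates", is updated only every `epoch` entries. The class and function names, the epoch length, the evolution labels, and the assumption that a trusted verifier holds both initial keys are all ours; this is not the paper's construction and carries no security claim.

```python
import hashlib
import hmac
from typing import List, Tuple

Entry = Tuple[bytes, bytes, bytes]  # (message, fast_tag, slow_tag)


def _evolve(key: bytes, label: bytes) -> bytes:
    """One-way key update: the next key is a hash of the current one."""
    return hashlib.sha256(label + key).digest()


def _tag(key: bytes, message: bytes) -> bytes:
    return hmac.new(key, message, hashlib.sha256).digest()


class TwoRateLogger:
    """Hypothetical logger: the fast key evolves after every entry, the slow key every `epoch` entries."""

    def __init__(self, fast_key: bytes, slow_key: bytes, epoch: int = 4) -> None:
        if epoch <= 0:
            raise ValueError("epoch must be positive")
        self._fast, self._slow, self._epoch = fast_key, slow_key, epoch
        self._count = 0
        self.entries: List[Entry] = []

    def append(self, message: bytes) -> None:
        self.entries.append((message, _tag(self._fast, message), _tag(self._slow, message)))
        self._count += 1
        # Overwrite the old fast key (a real system must also erase it securely).
        self._fast = _evolve(self._fast, b"fast")
        if self._count % self._epoch == 0:
            self._slow = _evolve(self._slow, b"slow")


def verify(entries: List[Entry], fast_key: bytes, slow_key: bytes, epoch: int = 4) -> bool:
    """Recompute both key chains from the initial keys and check every tag."""
    fast, slow = fast_key, slow_key
    for i, (message, fast_tag, slow_tag) in enumerate(entries, start=1):
        if not hmac.compare_digest(fast_tag, _tag(fast, message)):
            return False
        if not hmac.compare_digest(slow_tag, _tag(slow, message)):
            return False
        fast = _evolve(fast, b"fast")
        if i % epoch == 0:
            slow = _evolve(slow, b"slow")
    return True
```

Modifying any stored message makes `verify` return `False`. Note that this building block by itself does not detect deletion of the most recent entries, which a crash attack can disguise as ordinary data loss; handling that case is the focus of the paper's scheme and is not reproduced here.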

@inproceedings{avizheh2020secure, title = {Secure logging with security against adaptive crash attack}, author = {Avizheh, Sepideh and Safavi-Naini, Reihaneh and Li, Shuai}, booktitle = {Foundations and Practice of Security: 12th International Symposium, FPS 2019, Toulouse, France, November 5--7, 2019, Revised Selected Papers 12}, pages = {137--155}, year = {2020}, organization = {Springer}, }

2018


Towards a resilient smart home. Tam Thanh Doan, Reihaneh Safavi-Naini, Shuai Li, Sepideh Avizheh, Muni Venkateswarlu K, and Philip WL Fong. In Proceedings of the 2018 workshop on IoT security and privacy, 2018.

Best Paper Award

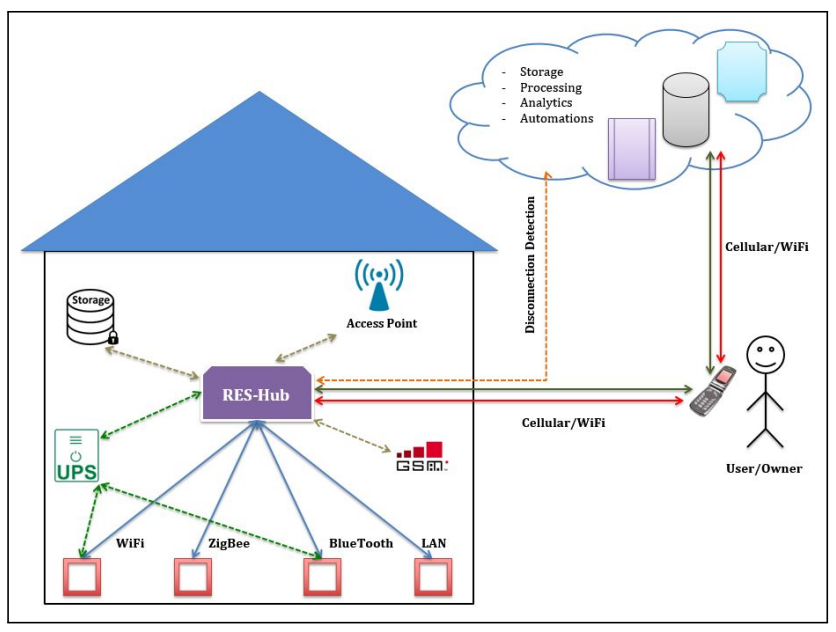

Today’s smart home platforms, such as Samsung SmartThings and Amazon AWS IoT, are primarily cloud based: devices in the home sense the environment and send the collected data, directly or through a hub, to the cloud. The cloud runs various applications and analytics on the collected data and generates commands, according to the users’ specifications, that are sent to the actuators to control the environment. The role of the hub in this setup is effectively message passing between the devices and the cloud, while the required analytics, computation, and control are all performed by the cloud. We ask the following question: what if the cloud is not available? This can happen not only by accident or through natural causes, but also due to targeted attacks. We discuss possible effects of such unavailability on the functionalities that are commonly available in smart homes, including security- and safety-related services as well as support for the health and well-being of home users, and propose RES-Hub, a hub that can provide the required functionalities when the cloud is unavailable. During normal operation of the system, RES-Hub receives regular status updates from the cloud and uses this information to continue providing the user-specified services when it detects that the cloud is down. We describe an IoTivity-based software architecture that is used to implement RES-Hub in a flexible and extensible way and discuss our implementation.
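The fallback behavior described above can be sketched as a heartbeat timeout: each status update from the cloud refreshes a timestamp and a cached copy of the user's rules, and once no update has arrived within the timeout, the hub evaluates the cached rules locally. The class name, the rule format (a function from sensor readings to an optional command), and the 30-second default are hypothetical, and the paper's IoTivity-based implementation is not reproduced here. Time is passed in explicitly so the logic is easy to test.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

Rule = Callable[[Dict[str, float]], Optional[str]]  # readings -> command or None


@dataclass
class ResilientHub:
    """Hypothetical hub that takes over rule execution when cloud updates stop."""

    timeout_s: float = 30.0
    last_update: float = float("-inf")  # no update received yet
    cached_rules: List[Rule] = field(default_factory=list)

    def on_cloud_update(self, now: float, rules: List[Rule]) -> None:
        # A regular status update doubles as a heartbeat and refreshes the rule cache.
        self.last_update = now
        self.cached_rules = list(rules)

    def cloud_available(self, now: float) -> bool:
        return now - self.last_update <= self.timeout_s

    def handle(self, now: float, readings: Dict[str, float]) -> List[str]:
        if self.cloud_available(now):
            return []  # the cloud is in charge; the hub only relays messages
        commands = []
        for rule in self.cached_rules:  # local fallback using the last known rules
            command = rule(readings)
            if command is not None:
                commands.append(command)
        return commands
```

For example, after a cloud update at time 0 with a rule that sounds an alarm when the smoke reading exceeds 0.5, `handle(5.0, {"smoke": 0.9})` returns `[]` (the cloud is still considered up), while `handle(20.0, {"smoke": 0.9})` with a 10-second timeout returns `["sound_alarm"]`. A single timeout is the simplest detector; a real hub would likely also probe the cloud actively to avoid false failovers.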

@inproceedings{doan2018towards, title = {Towards a resilient smart home}, author = {Doan, Tam Thanh and Safavi-Naini, Reihaneh and Li, Shuai and Avizheh, Sepideh and K, Muni Venkateswarlu and Fong, Philip WL}, booktitle = {Proceedings of the 2018 workshop on IoT security and privacy}, pages = {15--21}, year = {2018}, }